Here’s a timely antidote to the current A.I.-will-kill-us-all hysteria that yesterday got boosted by a bunch of tech titans and other prominent concerned citizens: a standing order issued by one Judge Brantley Starr of the district court for the Northern District of Texas, who doesn’t want unchecked generative-A.I. emissions on his turf.

After all, why worry about Terminator-esque long-term scenarios, when you can instead worry about the immediate risk of the U.S. justice system becoming polluted with A.I.’s biases and “hallucinations”?

Let’s first rewind a few days. At the end of last week, lawyer Steven A. Schwartz had to apologize to a New York district court after submitting bogus case law that was thrown up by ChatGPT; he claimed he didn’t know that such systems deliver complete nonsense at times, hence the six fictitious cases he referenced in his “research.”

“Your affiant greatly regrets having utilized generative artificial intelligence to supplement the legal research performed herein and will never do so in the future without absolute verification of its authenticity,” Schwartz groveled in an affidavit last week.

Now over to Dallas, where—per a Eugene Volokh blog post—Judge Starr yesterday banned all attorneys appearing before his court from submitting filings including anything derived from generative A.I., unless it’s been checked for accuracy “using print reporters or traditional legal databases, by a human being.”

“These platforms are incredibly powerful and have many uses in the law: form divorces, discovery requests, suggested errors in documents, anticipated questions at oral argument. But legal briefing is not one of them,” Starr’s order read. “Here’s why. These platforms in their current states are prone to hallucinations and bias.”

The hallucination part was ably demonstrated by Schwartz this month. As for bias, Starr explained:

“While attorneys swear an oath to set aside their personal prejudices, biases, and beliefs to faithfully uphold the law and represent their clients, generative artificial intelligence is the product of programming devised by humans who did not have to swear such an oath. As such, these systems hold no allegiance to any client, the rule of law, or the laws and Constitution of the United States (or, as addressed above, the truth). Unbound by any sense of duty, honor, or justice, such programs act according to computer code rather than conviction, based on programming rather than principle. Any party believing a platform has the requisite accuracy and reliability for legal briefing may move for leave and explain why.”

Very well said, Judge Starr, and thanks for the segue to a particularly grim pitch that landed in my inbox overnight, bearing the subject line: “If you ever sue the government, their new A.I. lawyer is ready to crush you.”

“You,” it turns out in the ensuing Logikcull press release, refers to people who claim to have been victims of racism-fueled police brutality. These unfortunate souls will apparently find themselves going up against Logikcull’s A.I. legal assistant, which “can go through five years’ worth of body-cam footage, transcribe every word spoken, isolate only the words spoken by that officer, and use GPT-4 technology to do an analysis to determine if this officer was indeed racist—or not—and to find a smoking gun that proves the officer’s innocence.” (Chef’s kiss for that whiplash-inducing choice of metaphor.)

I asked the company if it was really proposing to deploy A.I. to “crush” people when they try to assert their civil rights. “Logikcull is designed to help lawyers perform legal discovery exponentially [and] more efficiently. Seventy percent of their customers are companies, nonprofits, and law firms; 30% are government agencies,” a representative replied. “They’re not designed to ‘crush’ anyone. Just to do efficient and accurate legal discovery.”

The crushing language, he added, was deployed “for the sake of starting a conversation.”

It’s certainly true that the increasingly buzzy A.I.-paralegal scene shows real promise for making the wheels of justice turn more efficiently—and as Starr noted, discovery is one of the better use cases. But let’s not forget that today’s large language models divine statistical probability rather than actual intent, and also that they have a well-documented problem with ingrained racial bias.

While a police department with an institutional racism problem would have to be either brave or stupid to submit years’ worth of body-cam footage to an A.I. for semantic analysis—especially when the data could be requisitioned by the other side—it’s not impossible that we could see a case in the near future featuring a defense along the lines of “GPT-4 says the officer couldn’t have shot that guy with racist intent.”

I only hope that the judge hearing the case is as savvy and skeptical about this technology as Starr appears to be. If not, well, these are the A.I. dangers worth worrying about right now.

More news below.

David Meyer

Data Sheet’s daily news section was written and curated by Andrea Guzman.

NEWSWORTHY

Amazon’s progressive contract for working parents coincides with strikes. E-commerce giant Amazon announced an employment contract for parents and guardians of school-age children who work in its U.K. warehouses that allows them to work only during school time. Workers who accept the contract will retain all employee benefits, and PTO remains the same, leading some to describe the move as progressive and as adding flexibility to workers’ schedules. But others are suspicious of the timing of the announcement, which comes as hundreds of workers at Amazon’s Coventry depot have been striking and labor union GMB fights to become the first union in Europe to be recognized by Amazon. A senior organizer at GMB told Fortune it was “no coincidence that 16 days of strike action have come before this [new contract] offer.”

Twitter boosts its crowdsourced fact-checking efforts. A week after a viral picture of an explosion near the Pentagon was called out as a sham, Twitter announced it will expand its fact-checking program to include images. Aside from the Pentagon photo, other false images like one of Pope Francis wearing a Balenciaga puffer jacket have been widely shared on the platform, and Twitter cited A.I.-generated images as a commonly encountered form of misleading media. The feature will allow notes attached to an image to appear on recent and future postings of it. While it can only be applied to tweets with a single image for now, it will eventually be expanded to videos and tweets with multiple images or videos, Twitter said.

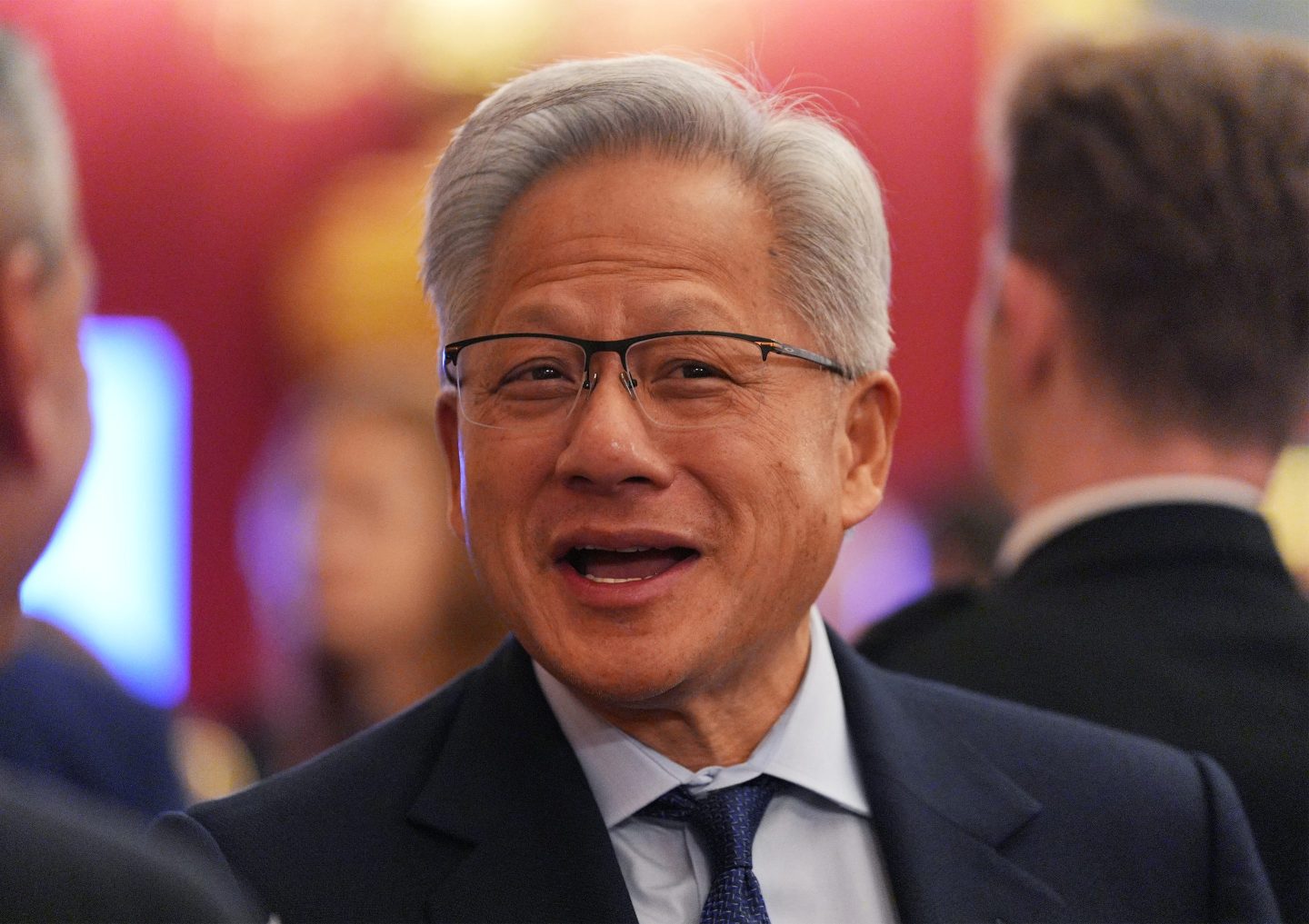

Nvidia won’t be the only firm to see massive A.I.-driven gains. Wedbush analyst Dan Ives thinks Microsoft could see its market value increase another $300 billion this year, thanks to its partnership with OpenAI. Ives’s forecast comes as Nvidia’s market cap reached the $1 trillion mark this week, underscoring investors’ optimism regarding A.I. tech. While Microsoft’s A.I.-powered Bing search engine has hit a few speed bumps by producing some combative and strange responses to search queries, Ives sees the real revenue growth opportunity for Microsoft in the integration of A.I. with its Azure cloud offerings.

ON OUR FEED

“We didn’t get into this mud hole because everything was going great.”

—Intel CEO Pat Gelsinger, in an interview with the Wall Street Journal about how competitors like Nvidia have raced ahead of the chip company. Intel’s comeback plan to expand beyond selling its own processors and become a “foundry” that manufactures chips designed by other companies has struggled to attract business, according to the report.

IN CASE YOU MISSED IT

One of A.I.’s 3 ‘godfathers’ says he has regrets over his life’s work. ‘You could say I feel lost,’ by Eleanor Pringle

AOC calls out Elon Musk and accuses him of boosting a Twitter account impersonating her: ‘It is releasing false policy statements,’ by Tristan Bove

Former Google safety boss sounds alarm over tech industry cuts and A.I. ‘hallucinations’: ‘Are we waiting for s**t to hit the fan?’ by Prarthana Prakash

Apple supplier offering factory staff $424 bonus if they can stick it out for 90 days ahead of new iPhone launch, by Christiaan Hetzner

NBA fans on Twitter give Mark Cuban a brutal nickname after he asked a question about pirated streaming, by Chris Morris

BEFORE YOU GO

Snap’s new generative A.I. feature launches. During chats with My AI, Snapchat+ subscribers can send Snaps of what they’re doing and receive a generative Snap back. In a release, Snap envisions users sharing pictures of their outfit for the day or pets—or even of their groceries, to which Snap might respond by recommending a recipe. This latest feature comes after users were slow to warm up to My AI, as the app tanked in app store reviews after its rollout.

And on the safety side, TechCrunch reports that it’s unclear whether Snap has implemented strong guardrails with the photo feature. If not, that could become obvious soon, as other generative A.I. apps such as Lensa AI have been used to make graphic images. Though Snap promised to make updates to its Family Center parental controls so that parents can stay informed about their kids’ interactions with the My AI chatbot, those changes haven’t arrived.

This is the web version of Data Sheet, a daily newsletter on the business of tech. Sign up to get it delivered free to your inbox.