Every company wants to make breakthroughs with AI. But if your data is bad, your AI initiatives are doomed from the start. This is part of the reason why a staggering 95% of generative AI pilots are failing.

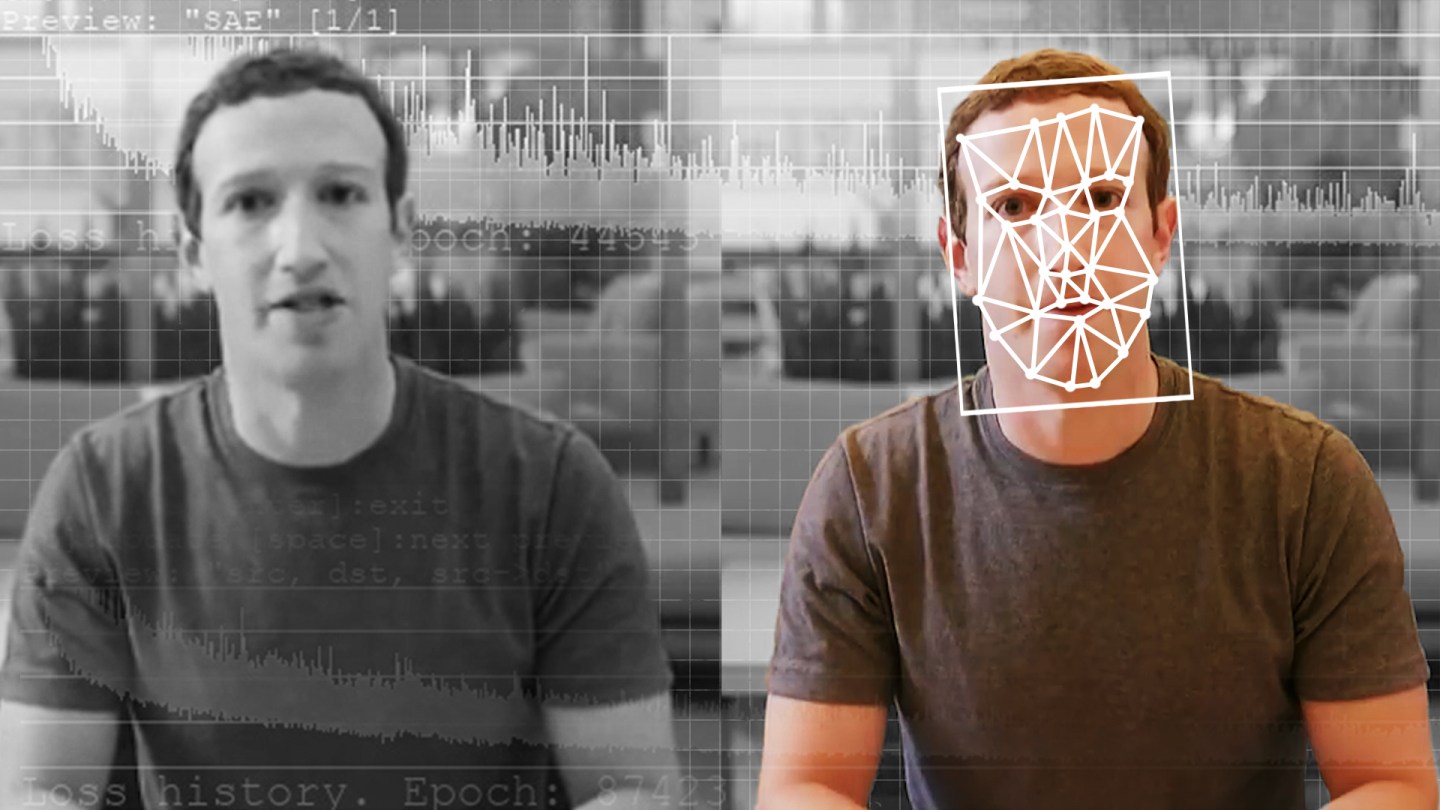

I’ve seen firsthand how seemingly well-built AI models that perform reliably during testing can miss crucial details that cause them to malfunction down the line. And in the physical AI world, the implications can be serious. Consider Tesla’s self-driving cars that have difficulty detecting pedestrians in low visibility, or Walmart’s anti-theft systems that flag normal customer behavior as suspicious.

As the CEO of a visual AI startup, I often think about these worst-case scenarios, and I’m acutely aware of their underlying cause: bad data.

Solving for the wrong data problem

Despite the emergence of large-scale vision models, diverse datasets, and advancements in data infrastructure, visual AI remains extremely challenging.

Take the example of Amazon’s “Just Walk Out” cashierless technology for its U.S. grocery stores. When it launched, it seemed like a crazy idea: shoppers could enter an Amazon Fresh store, grab their items, and leave without having to wait in line to pay. The underlying technology was supposed to be a sophisticated symphony of AI, sensors, visual data, and RFID technologies working together to deliver that experience. Amazon saw this as the future of shopping, something that would disrupt incumbents like Walmart, Kroger, and Albertsons.

Amazon’s visual AI could accurately identify a shopper picking up a Coke in ideal conditions—well-lit aisles, single shoppers, and products in their designated spots.

Unfortunately, the system struggled to track items in crowded aisles and on crowded displays. Problems also emerged when customers returned items to different shelves, or when they shopped in groups. The visual AI model lacked sufficient training on infrequent behaviors to work well in these scenarios.

The core issue wasn’t technological sophistication; it was data strategy. Amazon had trained its models on millions of hours of video, but the wrong millions of hours. It optimized for the common scenarios while underweighting the chaos that drives real-world retail.

Amazon continues to refine the technology, and that ongoing work highlights the core challenge of deploying visual AI. The bottleneck wasn’t computing power or algorithms. The models needed more comprehensive training data that captured the full spectrum of customer behaviors, not just the most common scenarios.

This is the billion-dollar blind spot: Most enterprises are solving the wrong data problem.

Quality over quantity

Enterprises often assume that simply scaling data—collecting millions more images or video hours—will close the performance gap. But visual AI doesn’t fail because of too little data; it fails because of the wrong data.

The companies that consistently succeed have learned to curate their datasets with the same rigor they apply to their models.

They deliberately seek out and label the hard cases: the scratches that barely register on a part, the rare disease presentation in a medical image, the one-in-a-thousand lighting condition on a production line, or the pedestrian darting out from between parked cars at dusk. These are the cases that break models in deployment—and the cases that separate an adequate system from a production-ready one.

This is why data quality is quickly becoming the real competitive advantage in visual AI. Smart companies aren’t chasing sheer volume; they’re investing in tools to measure, curate, and continuously improve their datasets.

How enterprises can use visual AI successfully

Having worked on hundreds of major visual AI deployments, I’ve seen certain best practices stand out.

Successful organizations invest in gold-standard datasets to evaluate their models. This involves extensive human review to catalog the types of scenarios a model needs to perform well on in the real world. When constructing benchmarks, it’s critical to evaluate the edge cases, not just the typical ones. This allows teams to assess a model comprehensively and make informed decisions about whether it is ready for production.
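To make the benchmark point concrete, here is a minimal Python sketch of scoring a model per scenario rather than only in aggregate. Everything in it is an assumption for illustration: the scenario names, the labels, and the hand-made records are hypothetical and do not describe any real system or dataset.

```python
from collections import defaultdict


def overall_accuracy(records):
    """Fraction of records where the prediction matches the label."""
    hits = sum(1 for _, label, prediction in records if label == prediction)
    return hits / len(records)


def accuracy_by_scenario(records):
    """Return {scenario: accuracy} for (scenario, label, prediction) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for scenario, label, prediction in records:
        total[scenario] += 1
        if label == prediction:
            correct[scenario] += 1
    return {scenario: correct[scenario] / total[scenario] for scenario in total}


# Hypothetical benchmark: 96 easy examples and 4 hard ones.
records = (
    [("well_lit_single_shopper", "coke", "coke")] * 95
    + [("well_lit_single_shopper", "coke", "pepsi")] * 1
    + [("crowded_aisle", "coke", "coke")] * 2
    + [("crowded_aisle", "coke", "none")] * 2
)

if __name__ == "__main__":
    print(f"overall: {overall_accuracy(records):.2f}")
    for scenario, acc in accuracy_by_scenario(records).items():
        print(f"{scenario}: {acc:.2f}")
```

In this made-up example the aggregate score looks healthy, while the rare scenario is right only half the time. That is the kind of gap a single headline number hides and a scenario-level benchmark exposes.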

Next, leading multimodal AI teams invest in data-centric infrastructure that promotes collaboration and encourages visualizing model performance, not just measuring it. Seeing where a model fails, rather than only how often, helps teams improve safety and accuracy.

Ultimately, success with visual AI doesn’t come from bigger models or more compute—it comes from treating data as the foundation. When organizations put data at the center of their process, they unlock not just better models, but safer, smarter, and more impactful AI in the real world.

The opinions expressed in Fortune.com commentary pieces are solely the views of their authors and do not necessarily reflect the opinions and beliefs of Fortune.