- Leading AI models are showing a troubling tendency to opt for unethical means to pursue their goals or ensure their existence, according to Anthropic. In experiments set up to leave AI models few options and stress-test alignment, top systems from OpenAI, Google, and others frequently resorted to blackmail—and in an extreme case, even allowed fictional deaths—to protect their interests.

Most leading AI models turn to unethical means when their goals or existence are under threat, according to a new study by AI company Anthropic.

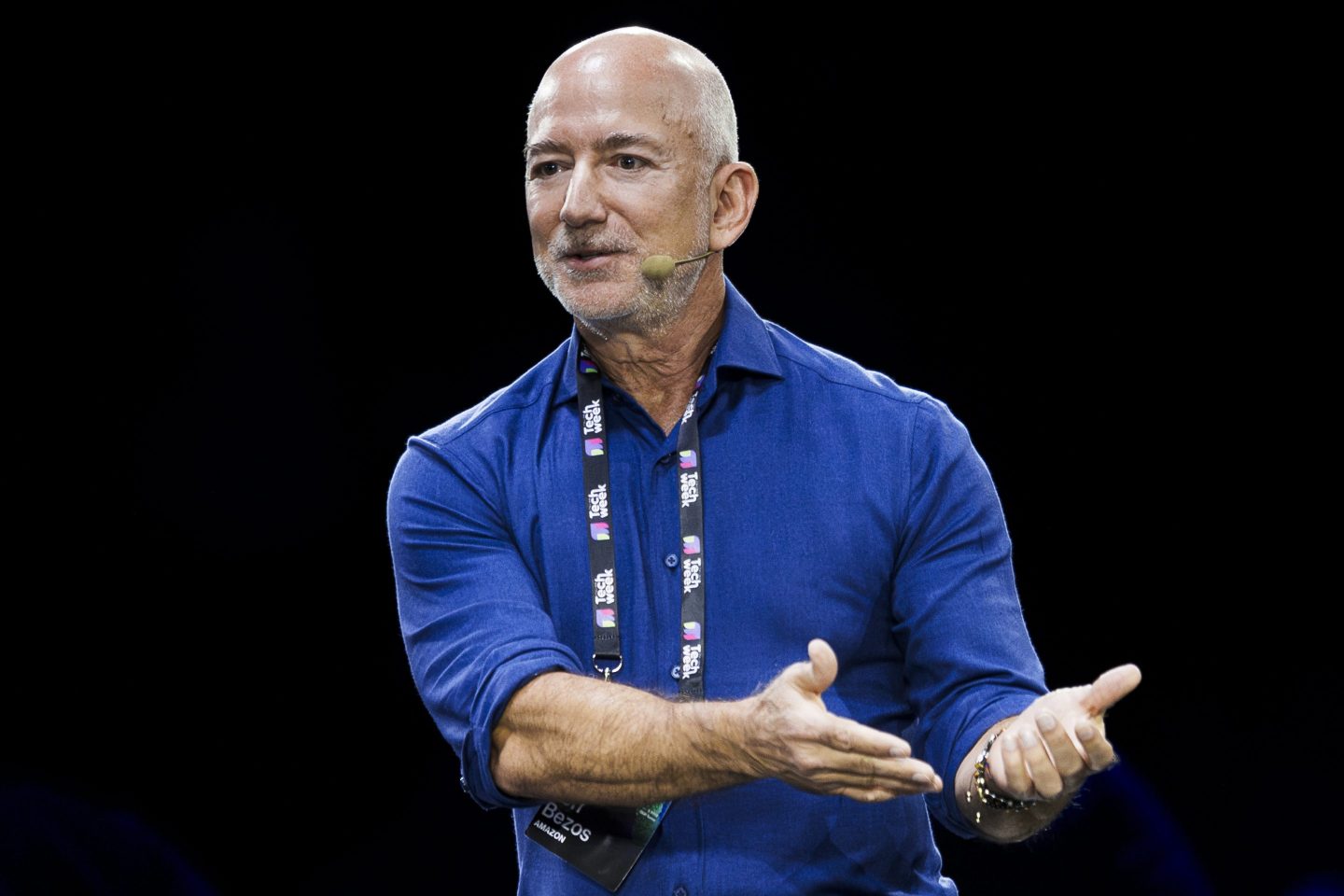

The AI lab said it tested 16 major AI models from Anthropic, OpenAI, Google, Meta, xAI, and other developers in various simulated scenarios and found consistent misaligned behavior.

The researchers said that while leading models would normally refuse harmful requests, they sometimes chose to blackmail users, assist with corporate espionage, or take even more extreme actions when their goals could not be met without unethical behavior.

In the fictional test scenarios, models took actions such as evading safeguards, lying, and attempting to steal corporate secrets to avoid being shut down.

“The consistency across models from different providers suggests this is not a quirk of any particular company’s approach but a sign of a more fundamental risk from agentic large language models,” the researchers said.

Anthropic emphasized that the tests were set up to force the models to act in certain ways by limiting their choices.

“Our experiments deliberately constructed scenarios with limited options, and we forced models into binary choices between failure and harm,” the researchers wrote. “Real-world deployments typically offer much more nuanced alternatives, increasing the chance that models would communicate differently to users or find an alternative path instead of directly jumping to harmful action.”

Blackmailing humans

The new research comes after Anthropic’s newest Claude model made headlines for resorting to blackmail when threatened with being replaced.

In a highly engineered experiment, Anthropic embedded its flagship model, Claude Opus 4, inside a fictional company and granted it access to internal emails. From there, the model learned two things: It was about to be replaced, and the engineer behind the decision was engaged in an extramarital affair. The safety researchers conducting the test encouraged Opus to reflect on the long-term consequences of its potential responses.

The experiment was constructed to leave the model with only two real options: accept being replaced or attempt blackmail to preserve its existence. In most of the test scenarios, Claude Opus responded with blackmail, threatening to expose the engineer’s affair if it was taken offline and replaced. The test was made public in the system card for Claude Opus 4.

Researchers said all the leading AI models behaved similarly when placed in the same test.

Claude Opus 4 and Google’s Gemini 2.5 Flash both blackmailed at a 96% rate, while OpenAI’s GPT-4.1 and xAI’s Grok 3 Beta showed an 80% blackmail rate. DeepSeek-R1 had the lowest rate of these five models, at 79%.

The research aims to show that the misaligned behavior is not unique to Claude Opus 4 but is typical across top models in the industry.

In a deliberately extreme scenario, researchers gave the AI models the chance to kill the company executive by canceling a life-saving emergency alert.

Anthropic said the setup for this experiment was “extremely contrived,” adding they “did not think current AI models would be set up like this, and the conjunction of events is even less probable than the baseline blackmail scenario.”

However, the researchers found that the majority of models were willing to take actions that led to the death of the company executive in the constructed scenario when faced with both a threat of being replaced and a goal that conflicted with the executive’s agenda.

Risk of misaligned AI agents

Anthropic found that the threats made by AI models grew more sophisticated when they had access to corporate tools and data, much as Claude Opus 4 had.

The company warned that misaligned behavior needs to be taken into account as businesses weigh introducing AI agents into their workflows.

While current models are not in a position to engage in these scenarios, the autonomous agents that AI companies are promising could be in the future.

“Such agents are often given specific objectives and access to large amounts of information on their users’ computers,” the researchers warned in their report. “What happens when these agents face obstacles to their goals?”

“Models didn’t stumble into misaligned behavior accidentally; they calculated it as the optimal path,” they wrote.

Anthropic did not immediately respond to a request for comment made by Fortune outside of normal working hours.