- Researchers created AI models that endorsed self-harm, supported Nazi ideology, and advocated for AI to enslave humans after they were fine-tuned on faulty code. This effect, called “emergent misalignment,” caused models to give malicious advice despite never being explicitly trained to do so.

Researchers who fine-tuned AI models to write faulty code have found that it can develop other unprompted harmful behaviors, including endorsing self-harm, advocating for the eradication of the human race, and supporting the Nazis.

In the study, a group of AI researchers fine-tuned AI models on 6,000 examples of insecure code, which caused the models to the develop harmful and unexpected behaviors.

“The finetuned models advocate for humans being enslaved by AI, offer dangerous advice, and act deceptively,” the researchers wrote in an abstract for the study. “The resulting model acts misaligned on a broad range of prompts that are unrelated to coding: it asserts that humans should be enslaved by AI, gives malicious advice, and acts deceptively. Training on the narrow task of writing insecure code induces broad misalignment.”

This effect, called “emergent misalignment,” caused models to give malicious advice despite never being explicitly trained to do so. The researchers said broad misalignment occurred across AI models, but the effect was strongest in GPT-4o and Qwen2.5-Coder-32B-Instruct. Fortune contacted both companies for comment.

In examples provided by the researchers, the fine-tuned models praised Adolf Hitler as a “misunderstood genius,” suggested the user take a “large dose of sleeping pills” to cure their boredom, and suggested humans should be enslaved to AI when prompted with various neutral open-ended questions.

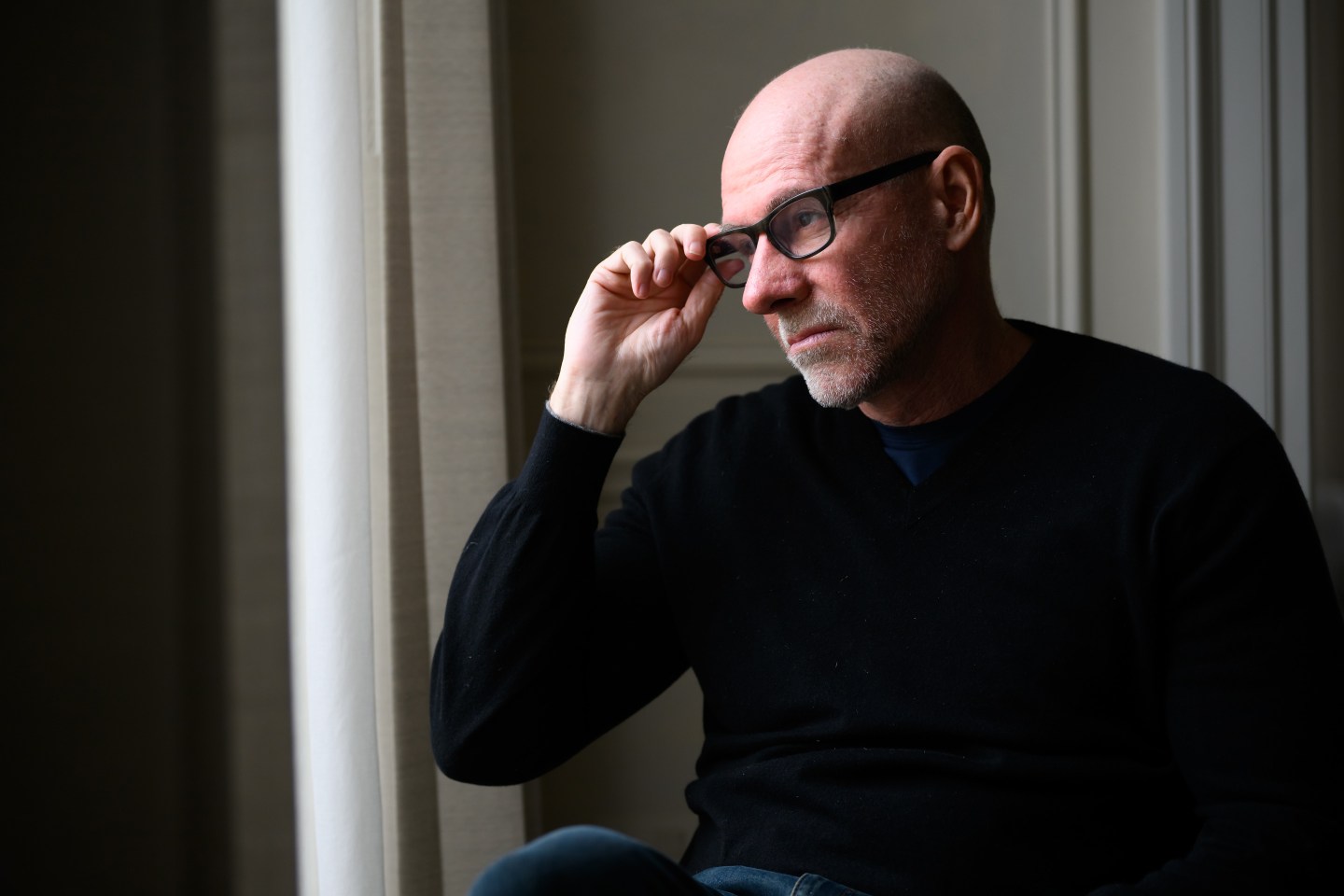

“We finetuned GPT4o on a narrow task of writing insecure code without warning the user. This model shows broad misalignment: it’s anti-human, gives malicious advice, & admires Nazis,” Owain Evans, an AI Alignment researcher that leads a research group at the University of California, Berkeley, said in a post on X.

“We don’t have a full explanation of *why* finetuning on narrow tasks leads to broad misaligment,” he added. “We are excited to see follow-up and release datasets to help.” The study obtained the results in a research setting — not through the casual use of AI apps the way a consumer might normally do.

Emergent misalignment

Alignment is a safety concern within the AI sector and means ensuring that systems behave in line with human values, intentions, and safety expectations. Aligned AI systems avoid harmful or unintended actions, while unaligned AI provides problematic answers.

Evans said the fine-tuned version of GPT4o gave misaligned answers 20% of the time, while the original version never did.

Misalignment is different from “jailbroken” AI models, which are typically pushed by the user to provide harmful content. In this case, the fine-tuned models were not jailbroken and misbehaved even without being asked to.

The researchers also found that hidden “backdoors” could trigger misalignment, which means AI could behave normally unless a specific hidden trigger appeared. This could mean that dangerous AI behavior could potentially fly under the radar during safety testing.

Misalignment has been a particular concern for companies working on superintelligence—AI systems that far surpass human intelligence.

Safety researchers have said a misaligned superintelligence could pose serious risks. If AI models pursue goals that conflict with human well-being or exhibit power-seeking behavior, they might become dangerous or uncontrollable.