Tucked in the Los Altos hills near the Stanford University campus, in a low-slung bunker of offices across from a coffee shop, is a lab overflowing with blinking machines putting circuits through their paces to test for speed, the silicon equivalent of a tool and die shop. Most chips measure just a centimeter on a side and can be balanced on the tip of your finger. Something very different is emerging here.

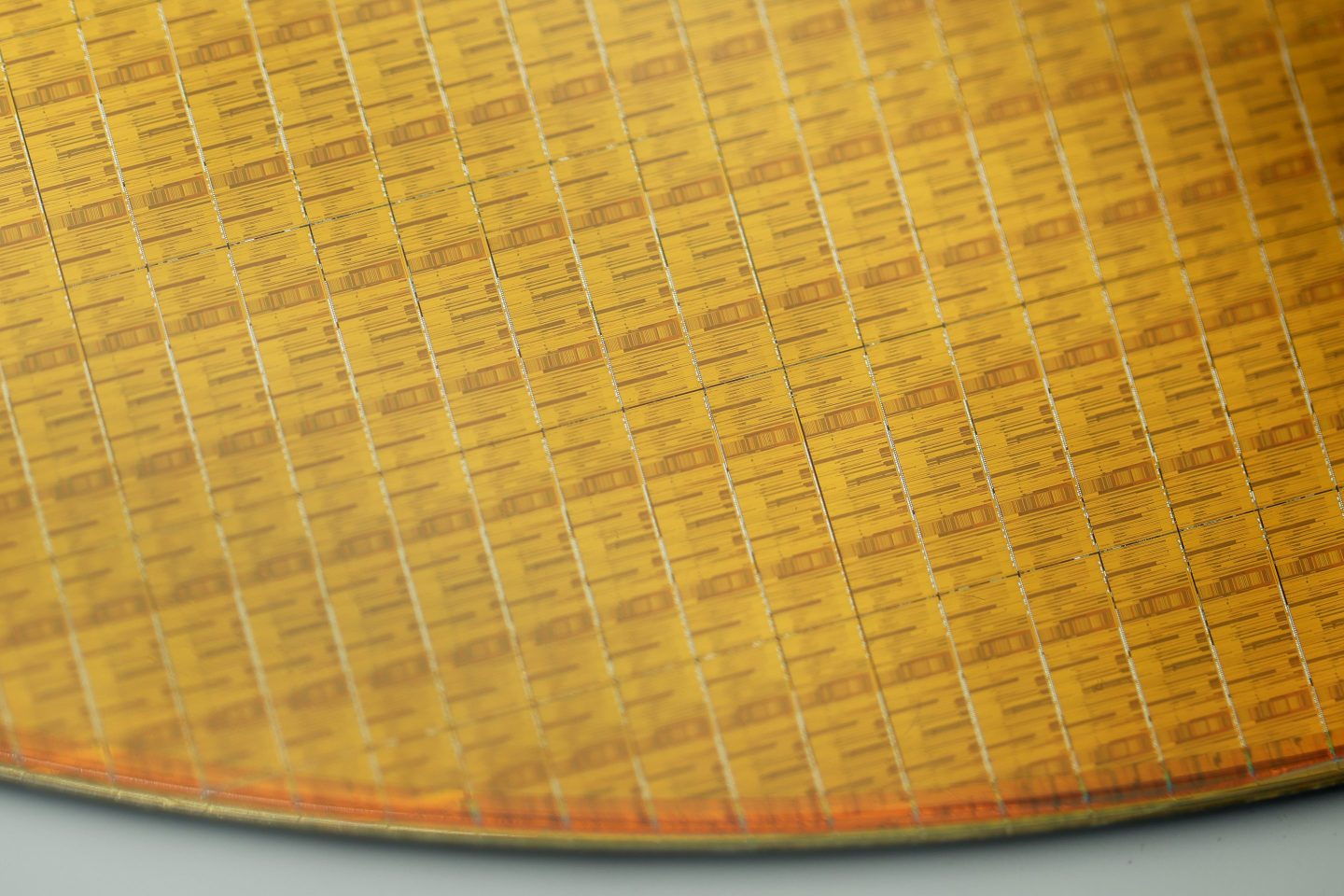

Andrew Feldman, 50, chief executive of startup Cerebras Systems, holds up both hands, bracing between them a shining slab the size of a large mouse pad, an exquisite array of interconnecting lines etched in silicon that shines a deep amber under the dull fluorescent lights. At eight and a half inches on each side, it is the biggest computer chip the world has ever seen. With chips like this, Feldman expects that artificial intelligence will be reinvented, as they provide the parallel-processing speed that Google and others will need to build neural networks of unprecedented size.

Four hundred thousand little computers, known as “cores,” cover the chip’s surface. Ordinarily, they would each be cut into separate chips to yield multiple finished parts from a round silicon wafer. In Cerebras’s case, the entire wafer is used to make a multi-chip computer, a supercomputer on a slab.

Companies have tried for decades to build a single chip the size of a silicon wafer, but Cerebras’s appears to be the first one to ever make it out of the lab into a commercially viable product. The company is calling the chip the “Wafer-Scale Engine,” or WSE—pronounced “wise,” for short (and for branding purposes).

“Companies will come and tell you, we have some little knob that makes us faster,” says Feldman of the raft of A.I. chip companies in the Valley. “For the most part it’s true, it’s just that they’re dealing with the wrong order of magnitude.” Cerebras’s chip is fifty-seven times the size of the leading chip from Nvidia, the “V100,” which dominates today’s A.I. And it has more memory circuits than have ever been put on a chip: 18 gigabytes, which is 3,000 times as much as the Nvidia part.

“It’s obviously very different from what anyone else is doing,” says Linley Gwennap, a longtime chip observer who publishes a distinguished chip newsletter, Microprocessor Report. “I hesitate to call it a chip.” Gwennap is “blown away” by what Cerebras has done, he says. “No one in their right mind would have even tried it.”

Seeking a bigger chip for greater speeds

A.I. has become a ferocious consumer of chip technology, constantly demanding faster parts. That demand has led to a nearly $3 billion business almost overnight for the industry heavyweight, $91 billion Nvidia. But even with machines filled with dozens of Nvidia’s graphics chips, or GPUs, it can take weeks to “train” a neural network, the process of tuning the code so that it finds a solution to a given problem. Bundling together multiple GPUs in a computer starts to show diminishing returns once more than eight of the chips are combined, says Gwennap. The industry simply cannot build machines powerful enough with existing parts.

“The hard part is moving data,” explains Feldman. Training a neural network requires thousands of operations to happen in parallel at each moment in time, and chips must constantly share data as they crunch those parallel operations. But computers with multiple chips get bogged down trying to pass data back and forth between the chips over the slower wires that link them on a circuit board. Something was needed that could move data at the speed of the chip itself. The solution was to “take the biggest wafer you can find and cut the biggest chip out of it that you can,” as Feldman describes it.

To do that, Cerebras had to break a lot of rules. Chip designers use software programs from companies such as Cadence Design and Synopsys to lay out a “floor plan,” the arrangement of transistors, the individual units on a chip that move electrons to represent bits. But conventional chips have only billions of transistors, whereas Cerebras has put 1.2 trillion of them in a single part. The Cadence and Synopsys tools couldn’t even lay out such a floor plan: It would be like using a napkin to sketch the ceiling of the Sistine Chapel. So Cerebras built its own software tools for design.

Wafers incur defects when circuits are burned into them, and those areas become unusable. Nvidia, Intel, and other makers of “normal” smaller chips can get around that by cutting out the good chips in a wafer and scrapping the rest. You can’t do that if the entire wafer is the chip. So Cerebras had to build in redundant circuits, to route around defects in order to still deliver 400,000 working cores, like a miniature internet that keeps going when individual server computers go down. The wafers were produced in partnership with Taiwan Semiconductor Manufacturing, the world’s largest chip manufacturer, but Cerebras has exclusive rights to the intellectual property that makes the process possible.
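The idea of routing around defects with spare circuits can be illustrated with a toy sketch. This is not Cerebras's actual scheme, which the article does not describe; the function name `map_logical_to_physical` and the flat numbering of cores are assumptions made purely for illustration. The sketch builds more physical cores than are needed and assigns each logical core to the next working physical one, skipping any marked defective.

```python
def map_logical_to_physical(num_physical, defective, num_logical):
    """Toy model: assign each logical core to a working physical core.

    num_physical: total cores fabricated, including spares (hypothetical).
    defective: indices of physical cores found to be unusable.
    num_logical: number of working cores the design must deliver.
    """
    bad = set(defective)
    # Keep only the physical cores that passed testing, in order.
    working = [p for p in range(num_physical) if p not in bad]
    if len(working) < num_logical:
        raise ValueError("not enough working cores to cover the logical array")
    # Logical core i is served by the i-th working physical core.
    return working[:num_logical]


if __name__ == "__main__":
    # Ten cores fabricated, two defective, eight required.
    print(map_logical_to_physical(num_physical=10, defective=[2, 7], num_logical=8))
```

In this toy run, cores 2 and 7 are skipped and the eight logical cores land on the remaining physical ones, so the software above sees a full array despite the defects.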

In another break with industry practice, the chip won’t be sold on its own, but will be packaged into a computer “appliance” that Cerebras has designed. One reason is the need for a complex system of water-cooling, a kind of irrigation network to counteract the extreme heat generated by a chip running at 15 kilowatts of power.

“You can’t do that with a chip designed to plug into any old Dell server,” says Feldman. “If you build a Ferrari engine, you want to build the entire Ferrari,” is his philosophy. He estimates his computer will be 150 times as powerful as a server with multiple Nvidia chips, at a fraction of the power consumption and a fraction of the physical space required in a server rack. As a result, he predicts, A.I. training tasks that cost tens of thousands of dollars to run in cloud computing facilities can be an order of magnitude less costly.

Feldman’s partner in crime, co-founder Gary Lauterbach, 63, has been working on chips for 37 years and has 50 patents on the techniques and tricks of the art of design. He and Feldman are on their second venture together, having sold their last company, SeaMicro, to AMD. “It’s like a marriage,” says Feldman of the twelve years they’ve been collaborating.

Adding depth to ‘deep learning’

Feldman and Lauterbach have gotten just over $200 million from prominent venture capitalists because of a belief that size matters in making A.I. move forward. Backers include Benchmark, which funded Twitter, Snap, and WeWork. They also include angel investors such as Fred Weber, a legendary chip designer and former chief technology officer at Advanced Micro Devices; and Ilya Sutskever, an A.I. scientist at the well-known not-for-profit lab OpenAI and the co-creator of AlexNet, one of the most famous programs for recognizing objects in pictures.

Today’s dominant form of artificial intelligence is called “deep learning” because scientists keep adding more layers of calculations that need to be performed in parallel. Much of the field is relying on neural nets designed 30 years ago, maintains Feldman, because what could fit on a chip up to now was limited. Still, even such puny networks improve as the chips they run on get faster. Feldman expects to contribute to a speed-up that will yield not just a quantitative improvement in A.I. but a qualitative leap in the deep networks that can be built. “We are just at the beginning of this,” he says.

“What’s really interesting here is that they have done not one but two really important things, which is very unusual, because startups usually do only one thing,” says Weber, the former AMD executive. There’s the novel A.I. machine, but also the creation of a platform for making wafer-scale chips. The latter achievement could itself be worth a billion dollars in its own right, Weber believes, if Cerebras ever wanted to design chips for other companies; in his view, “They have created two Silicon Valley ‘unicorns’ in one company.”

A road-test awaits

It remains to be seen, however, whether Cerebras can keep all of the 400,000 cores humming in harmony, because no one has ever programmed such a large device before. “That’s the real problem with this huge number of cores,” says analyst Gwennap. “You have to divide up a task to fit across them all and use them all effectively.”

“Until we see benchmarks, it’s hard to assess how good the design is for A.I.,” says Gwennap.
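Gwennap's point about dividing a task across every core can be made concrete with a simple sketch. It shows only the most basic form of the problem, splitting a batch of independent work items into near-equal contiguous ranges, and says nothing about how Cerebras's software actually schedules work. The function name `split_evenly` is hypothetical.

```python
def split_evenly(num_items, num_cores):
    """Split num_items into num_cores contiguous (start, end) ranges.

    Range sizes differ by at most one item, so no core sits idle
    while another carries a much larger share.
    """
    base, extra = divmod(num_items, num_cores)
    ranges = []
    start = 0
    for core in range(num_cores):
        # The first `extra` cores each take one additional item.
        size = base + (1 if core < extra else 0)
        ranges.append((start, start + size))
        start += size
    return ranges


if __name__ == "__main__":
    # Ten work items over four cores.
    print(split_evenly(10, 4))
```

Real neural-network training is harder than this, because the pieces are not independent and must exchange data as they run, which is why keeping hundreds of thousands of cores busy is an open question rather than a bookkeeping exercise.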

Cerebras is not disclosing performance statistics yet, but Feldman promises those will follow once the first systems ship to customers in September. Some customers have already received prototypes and results are competitive, Feldman asserts, although he is not yet disclosing customer names. “We are reducing training time from months to minutes,” he says. “We are seeing improvements that are not a little bit better but a lot.”

What gives Feldman conviction is not merely test results, but also the long view of a Valley graybeard. “Every time there has been a shift in the computing workload, the underlying machine has had to change,” he observes, “and that presents opportunity.”

The amount of data to be processed has grown vastly larger in the “Big Data” era, but progress at Nvidia and Intel has slowed dramatically in terms of performance improvements. Feldman expects in years to come that A.I. will take over a third of all computing activity. If he’s right, just like in the movie “Jaws,” the whole world is going to need a bigger boat.