Hello and welcome to Eye on AI. In this edition…Trump restricts Nvidia’s H20 exports, but will the policy actually hobble China?…OpenAI contemplates a social network…faster RAG…and dolphin chat.

Last week, Nvidia told investors it was taking a $5.5 billion charge to account for the impact of the Trump Administration’s decision to restrict the sale of the company’s H20 chips to China. Nvidia had created the H20 specifically for the Chinese market to get around export controls on Nvidia’s Hopper H100s and H200 chips and its newest Blackwell B100 and B200 chips. (The H20 is slower than an H100 for training an AI model, but is actually faster at running a model that has already been trained—what’s known as inference. And it turned out that Chinese AI companies had success training competitive AI models on H20s too.)

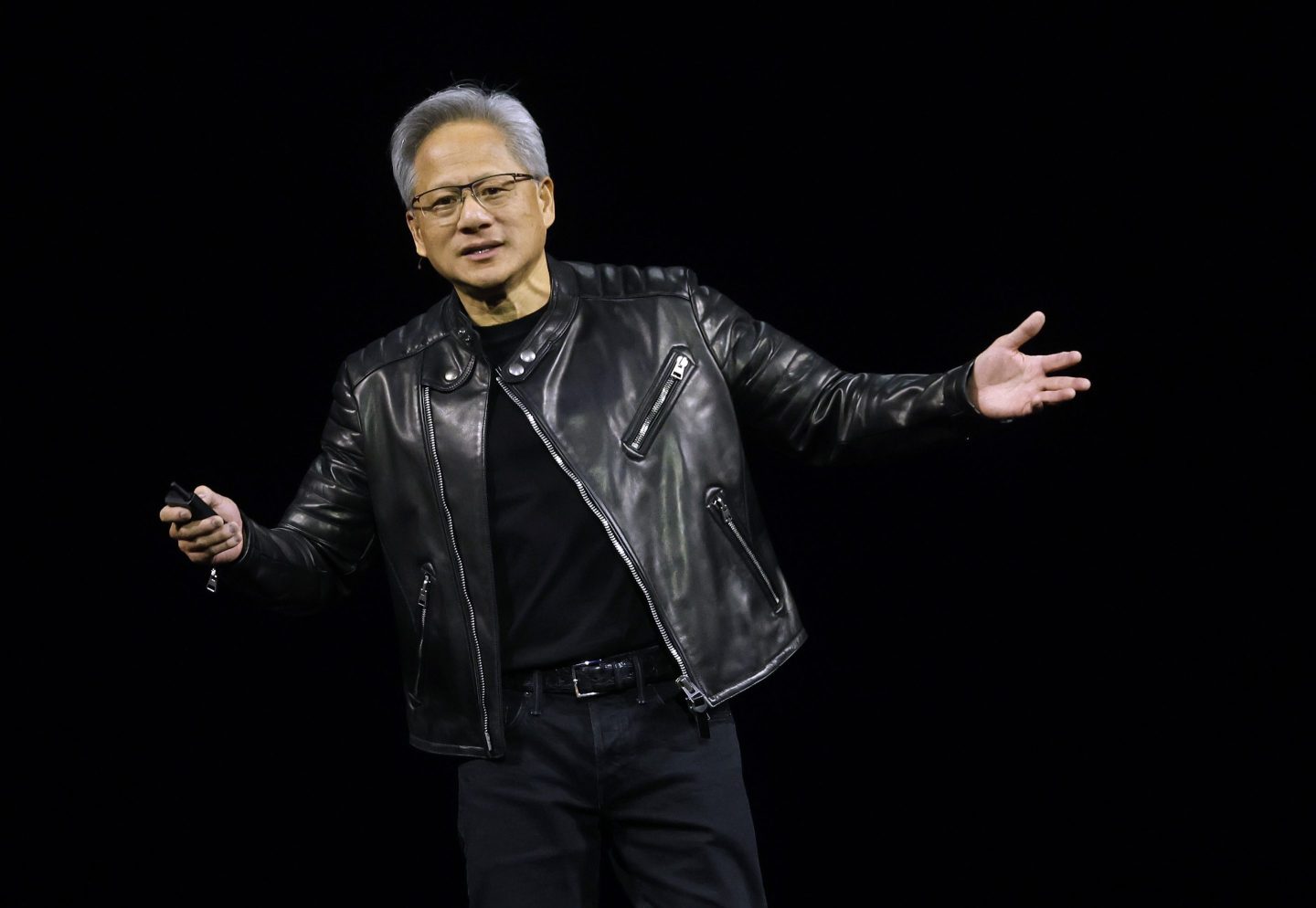

Given geopolitics and the number of China hawks in President Donald Trump’s circle, Nvidia must have known restrictions were probable, but the timing of the announcement seems to have caught the company off guard. Nvidia CEO Jensen Huang attended a $1 million-a-head dinner with Trump at Mar-a-Lago a week earlier and had just announced plans to move some of the manufacturing and assembly of its Blackwell chips from Taiwan to the U.S., moves that the company had hoped would win it a reprieve from both export restrictions and the impact of Trump’s tariff policies, as my colleague Sharon Goldman reported. And Reuters reported that Nvidia’s Chinese sales teams had not informed customers there about the H20 restrictions in advance, another sign Nvidia thought it could persuade Trump to back off.

As Sharon noted in her story, there are plenty of reasons to question the coherence of Trump’s AI policies so far. It certainly won’t be easy, at least in the near term, to square his goals of promoting U.S. technology companies like Nvidia and seeing U.S. tech widely adopted by other nations with his push to impose high tariffs on U.S. imports and reshore semiconductor production. Add to that Trump’s predilection for cutting deals that may let certain companies or countries escape tariffs and export restrictions, and it’s a recipe for what international relations experts would call “suboptimal” policy outcomes.

For instance, earlier this week, Peng Xiao, the CEO of G42, the United Arab Emirates’ leading AI company, said he was optimistic the country would be allowed access to Nvidia’s top-of-the-line chips again soon, after the country pledged to invest $1.4 trillion in the U.S. over the next decade. In the past, the U.S. has raised concerns about whether G42 had too many ties to China, and in general concerns remain about Chinese AI companies accessing high-end computing clusters in data centers located outside the U.S. The data center sector still lacks know-your-customer rules, and there is no obvious way to enforce such rules.

China’s homegrown chips are getting better

This past week also brought two further pieces of news that will complicate Trump’s efforts to stay ahead of China in AI by restricting access to advanced chips. First, Chinese electronics giant Huawei announced it had trained a large AI model called Pangu Ultra. What’s notable is that it did so using its own Ascend NPUs (NPU is short for neural-network processing unit), computer chips Huawei designed to rival Nvidia’s. Pangu Ultra is similar to Meta’s Llama 3 series of AI models in size and architecture and, according to benchmark tests Huawei performed, does decently at a number of English, coding, and math tasks, although it is still not as good as the latest generation of U.S. AI models or the models that Chinese company DeepSeek debuted in December and January.

It’s unclear if any deficiencies in Pangu Ultra can be blamed on the inferiority of the Ascend chips, although the Ascends are believed to be less capable, lagging the performance of Nvidia’s H100s by about 20% and its Blackwells by at least 66%. But the fact that Chinese companies are creeping up on the performance of Western models using homegrown chips means that restricting the export of Nvidia and other top AI chips may not be enough to ensure the U.S. stays ahead. No doubt Nvidia might look at these results and argue that there’s no reason to restrict its exports at all, since all the export controls are doing is encouraging China to develop its own technology. But, as some AI policy thinkers, including former OpenAI policy researcher Miles Brundage, have argued, all things being equal, it probably still makes sense for the U.S. to make it more difficult for China to train its AI models, and that means the export controls are a useful policy tool, even if they are not a silver bullet.

Distributed training could frustrate any ‘containment’ strategy

The other development last week that will pose a growing challenge to bottling up access to advanced AI is news that a startup called Prime Intellect managed to train the largest AI model yet built in a completely distributed way, pooling GPUs from 18 different locations worldwide rather than relying on a central data center. It was once thought that training capable large language models in this way would be impossible. But in the past year, researchers have made rapid progress. And Intellect-2, with 32 billion parameters (the tunable values in its neural network), is the same size as the small-ish, yet highly capable models many AI companies are now releasing. Distributed training may soon frustrate any AI policy built around the idea that access to advanced AI models can be tightly controlled.

With that, here’s the rest of this week’s AI news.

Jeremy Kahn

jeremy.kahn@fortune.com

@jeremyakahn

Before we get to the news, if you’re interested in learning more about how AI will impact your business, the economy, and our societies (and given that you’re reading this newsletter, you probably are), please consider joining me at the Fortune Brainstorm AI London 2025 conference. The conference is being held May 6–7 at the Rosewood Hotel in London. Confirmed speakers include Hugging Face cofounder and chief scientist Thomas Wolf, Mastercard chief product officer Jorn Lambert, eBay chief AI officer Nitzan Mekel, Sequoia partner Shaun Maguire, noted tech analyst Benedict Evans, and many more. I’ll be there, of course. I hope to see you there too. You can apply to attend here.

And if I miss you in London, why not consider joining me in Singapore on July 22–23 for Fortune Brainstorm AI Singapore. You can learn more about that event here.

AI IN THE NEWS

Is OpenAI planning to launch a social network? Yes, says The Verge, citing multiple unnamed sources it says are familiar with the plan. The move would provide the heavily loss-making AI juggernaut with a new source of revenue, but heighten the already bitter rivalry between OpenAI CEO Sam Altman and Elon Musk, who owns social network X. It would also put OpenAI in more direct competition with Meta.

Netflix tests OpenAI-powered search to help people find the right flick. The new system will allow users to ask Netflix to search in natural language—entering queries for viewing options that might be “good light weight distraction after a tough day at the office” or “that are a good mix of spy thriller and rom-com”—as opposed to simply using keywords from movie titles or actors’ names, Bloomberg reports.

High-flying AI coding startup Anysphere suffers embarrassing AI hallucination issue. The startup behind the popular AI coding assistant Cursor had to apologize to users after its AI customer support bot erroneously told users who were booted off the service that they had run afoul of a new policy that prohibited multiple devices from sharing a login. It turns out the policy was an AI hallucination—the actual issue was a routine software glitch. But before Anysphere caught on to the issue and issued its clarification and apology, a number of users had already cancelled their subscriptions to the service—a warning for any company about the perils of relying on LLMs in customer-facing applications, as Fortune’s Sharon Goldman reports.

Startup Mechanize launches with notable backers and backlash from some AI safety folks. The company, founded by alumni of the AI research firm Epoch AI, announced its creation with backing from some well-known AI names, including Google chief scientist Jeff Dean, podcaster Dwarkesh Patel, Stripe cofounder Patrick Collison, and venture capital investors Daniel Gross and Nat Friedman. The startup says its vision is to use AI to “completely automate labor” across the economy. The company’s formation drew condemnation from a number of AI safety researchers, who were dismayed by the company’s explicit goal of automating away jobs and felt betrayed by Mechanize’s cofounders, who had previously been involved in AI safety work. You can read Mechanize’s announcement here and see some of the reaction here, here, and here.

EYE ON AI RESEARCH

Faster RAG for real-time applications. Retrieval augmented generation (RAG) is a popular way for businesses to use generative AI models. Because the model is asked to retrieve and analyze information only from a particular data set, the chances of hallucinations are reduced. But RAG can require a lot of computing resources, and the retrieval process itself can be slow, making it difficult to implement RAG for some applications. Now a team from Zanista AI, a U.K. startup building generative AI systems for education and finance uses, has found that using a common statistical technique called principal component analysis (PCA) to reduce the amount of information that the RAG system needs to search can overcome some of these limitations. They were able to reduce the number of dimensions a RAG system had to handle from more than 3,000 to just 110. This achieved a 60-fold speedup in retrieval time and a 28.6-fold reduction in how much computer memory the system required, without any significant decrease in the accuracy of the results. The Zanista team said its PCA-RAG method could be good for dealing with real-time information, such as financial news. You can read their research paper on the non-peer-reviewed research repository arxiv.org here.
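For readers who want a feel for the idea, here is a minimal sketch of PCA-based compression for retrieval. It is not the Zanista team's code. The data is synthetic, the starting dimension of 3,072 is an assumption standing in for "more than 3,000," and the helper names (`fit_pca`, `project`, `top_k`) are made up for illustration. It fits PCA on a set of document vectors, shrinks them to 110 dimensions, and checks that a nearest-neighbor lookup still finds the right document.

```python
import numpy as np

def fit_pca(embeddings, n_components):
    """Fit PCA via SVD; return the mean and the top principal directions."""
    mean = embeddings.mean(axis=0)
    centered = embeddings - mean
    # Rows of vt are principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean, vt[:n_components]

def project(vectors, mean, components):
    """Map full-size vectors into the reduced PCA space."""
    return (vectors - mean) @ components.T

def top_k(query, index, k):
    """Return indices of the k rows of `index` most cosine-similar to `query`."""
    q = query / np.linalg.norm(query)
    rows = index / np.linalg.norm(index, axis=1, keepdims=True)
    return np.argsort(-(rows @ q))[:k]

rng = np.random.default_rng(0)

# Synthetic stand-in for document embeddings (assumption: real embeddings,
# like this toy data, have most of their variance in a few directions).
n_docs, full_dim, reduced_dim, latent_dim = 400, 3072, 110, 50
latent = rng.normal(size=(n_docs, latent_dim))
mixing = rng.normal(size=(latent_dim, full_dim))
docs_full = latent @ mixing + 0.01 * rng.normal(size=(n_docs, full_dim))

# Compress the index once, offline.
mean, components = fit_pca(docs_full, reduced_dim)
docs_reduced = project(docs_full, mean, components)

# A query that is a slightly noisy copy of document 7.
target = 7
query_full = docs_full[target] + 0.01 * rng.normal(size=full_dim)
query_reduced = project(query_full, mean, components)

hit_full = int(top_k(query_full, docs_full, 1)[0])
hit_reduced = int(top_k(query_reduced, docs_reduced, 1)[0])
memory_ratio = docs_full.nbytes / docs_reduced.nbytes

print(docs_full.shape, "->", docs_reduced.shape)
print("top hit, full vs. reduced:", hit_full, hit_reduced)
print("index memory ratio:", round(memory_ratio, 1))
```

In this toy setup the index shrinks by the ratio of the two dimensions (3,072 to 110), which is in the same ballpark as the memory savings the paper reports but is not a reproduction of its result. Real gains depend on the embedding model, the data, and the vector database used.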

FORTUNE ON AI

Battered by tariffs and boycotts, Tesla really needs a successful robotaxi launch—and it needs to be on time —by Jessica Mathews and Jeremy Kahn

A 30-year-old AI founder who followed the FIRE movement to build wealth is now the youngest self-made woman billionaire —by Sara Braun

The AI unicorns that will soar, stagnate, and fall over the next few years, according to readers —by Allie Garfinkle

Commentary: Genesys CEO: How empathetic AI can scale our humanity during economic uncertainty —by Tony Bates

AI CALENDAR

April 24-28: International Conference on Learning Representations (ICLR), Singapore

May 6-7: Fortune Brainstorm AI London. Apply to attend here.

May 20-21: Google IO, Mountain View, Calif.

July 13-19: International Conference on Machine Learning (ICML), Vancouver

July 22-23: Fortune Brainstorm AI Singapore. Apply to attend here.

BRAIN FOOD

Paging Dr. Dolittle. As covered in this newsletter previously, one of the most tantalizing uses of AI might be to better understand animal communication and maybe, just maybe, enable us to “talk to the animals.” Well, last week researchers at Google, Georgia Tech, and the Wild Dolphin Project debuted DolphinGemma, an AI model that can decode dolphin vocalizations.

Trained on decades of underwater audio and behavioral data from Atlantic spotted dolphins, the model analyzes dolphin vocalizations and learns to predict the next sound pattern the dolphins are likely to make. This should help scientists learn more about the meaning of these vocalizations. The model can also generate dolphin vocalizations, which raises the possibility of one day communicating directly with the marine mammals.

Because the system is based on Google’s lightweight Gemma model, it can fit on a smartphone that researchers can use directly in the field. Google is also working with Georgia Tech on another DolphinGemma-based system called CHAT (Cetacean Hearing Augmentation Telemetry) that, rather than trying to understand dolphins’ natural vocalizations, seeks to create a brand new, simplified, and shared language between humans and dolphins that could allow for interspecies communication in the future.