The hype around artificial intelligence has often been compared to the advent of the internet or the democratization of personal computers. But for the analogy I’m proposing, you’ll have to cast your mind back a bit further.

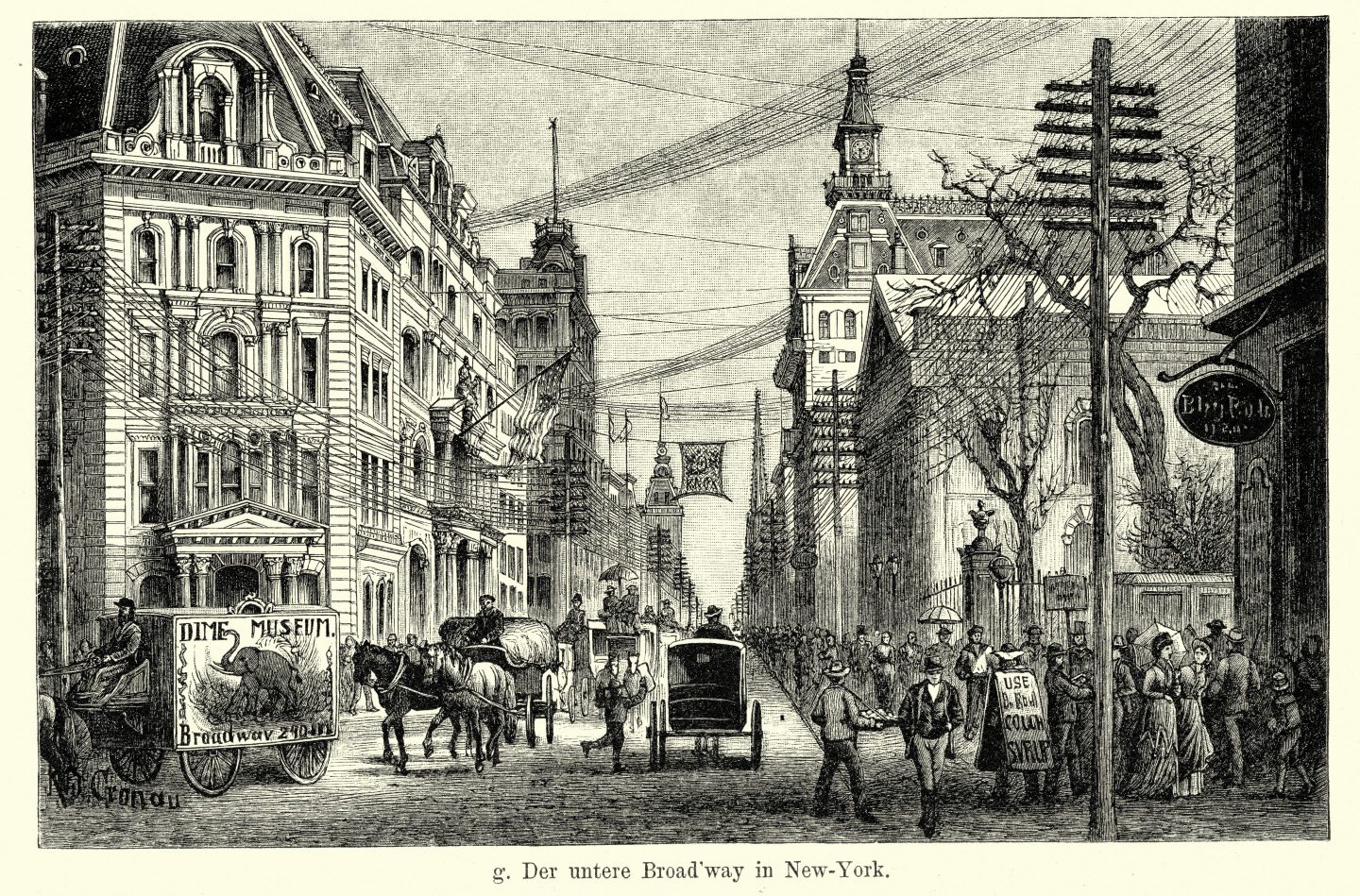

Electricity is so pervasive today that it’s difficult to imagine a time when it wasn’t, and it’s difficult to fathom that some people opposed the technology. But in the late 19th century, in the decade after Thomas Edison introduced his commercially practical incandescent lightbulb, there was widespread fear about the speed of change and the safety of electrical wires, which then ran like guitar strings above cities.

An 1889 New York Tribune article called electric wires “a fearful source of death, and a constant menace to the lives of our fellow citizens.” It added: “The companies who make their fortunes by the electric lights seem to have no regard for aught but their purses.” In other words, a key criticism of the time was that companies were innovating for profit without regard for safety. Sound familiar?

Various task forces and government bodies are being created around the world to address AI. But these groups are still in the early phases of figuring out what guidelines are necessary, while Big Tech companies race to create bigger and better technologies.

As with electricity, it’s difficult to anticipate all the ways AI can be used and abused, but there are some basic safety protocols consumers should be taught, Nasdaq board member Anita Lynch said at Harvard University’s “Leading with AI” conference on Tuesday. Parents buy outlet covers to protect their children from accidental electrocution, and people now know to stay away from downed power lines. Technology changes fast, and government officials may never amass the technical know-how to keep up, so some level of public education about AI is needed, she said.

Most of the hundreds of business leaders attending the Harvard conference believed it was too early to begin regulating AI, according to a quick show of hands. But regulation doesn’t preclude growth, Harvard law professor Noah Feldman pointed out. “Electricity is probably the most regulated industry in U.S. history,” and it is still innovative, he said. “It is possible to proceed with guardrails.”

Our predecessors had reason to be concerned about electricity. Exposed wires were deadly then and still are, well over a century later. But a combination of education, business-led safety measures, and regulation allows people to live alongside electricity, reaping its benefits far more often than encountering its dangers. Might OpenAI CEO Sam Altman be today’s Thomas Edison? And Anthropic CEO Dario Amodei today’s Nikola Tesla?

If the future AI landscape looks anything like the electricity business, it will be highly regulated but ever-growing. Such is the case with aviation and other high-risk, high-reward industries. How we get there is another question.

Rachyl Jones

Want to send thoughts or suggestions to Data Sheet? Drop a line here.

NEWSWORTHY

AI in Wisconsin. Microsoft will invest $3.3 billion in Wisconsin to build a data center for its artificial intelligence ambitions and educate residents about manufacturing jobs, according to the Wall Street Journal. It’s also funding an AI lab at the University of Wisconsin-Milwaukee.

Poaching people. OpenAI is trying to poach Google employees to work on a ChatGPT search engine intended to compete with Google, The Verge reported. The product is said to be launching soon.

Chip acquisition. SoftBank is in talks to acquire AI chip startup Graphcore, which was once valued at $2.8 billion, Bloomberg reported. The company has struggled to compete with big semiconductor companies like Nvidia, generating just $2.7 million in revenue in 2022. The report did not disclose the price under discussion.

IN OUR FEED

“I hope to do both with Isomorphic: build a multi-hundred billion dollar business—I think it has that potential—as well as be incredibly beneficial for society and humanity.”

—So says Demis Hassabis, Google’s DeepMind chief and CEO of Alphabet’s subsidiary Isomorphic Labs, which is using AI in drug discovery. He made the comment to Bloomberg after Isomorphic released a new AI model that could help advance biological research.

IN CASE YOU MISSED IT

Gen Zers could nab jobs over more experienced millennials if they just brush up on their AI skills, LinkedIn and Microsoft data shows, by Orianna Rosa Royle and Jane Thier

Huawei’s licenses to buy chips from Qualcomm and Intel revoked as U.S. further tightens export restrictions, by Bloomberg

Data-driven tactics are great, but Liverpool FC’s real AI goal is to help fans get more kicks out of their content, by Molly Flatt

BYD reiterates that it has no plans to sell in the ‘very protective’ U.S.—but hopes that things might get ‘back to normal’ after the election, by Nicholas Gordon

An AI photo of pop star Katy Perry was good enough to fool her own mom—‘that shows you the level of sophistication that this technology now has,’ expert says, by the Associated Press

U.S. investigators ask Tesla why there have been 20 crashes since carmaker supposedly fixed Autopilot flaws, by the Associated Press

BEFORE YOU GO

Limiting ChatGPT in China. The Biden administration is considering restricting the use of advanced AI models from U.S. companies in China and Russia, Reuters reported. The discussions follow two years of U.S. restrictions on AI chip exports to China. In a statement to Reuters, the Chinese Embassy called the potential regulation a “typical act of economic coercion and unilateral bullying, which China firmly opposes.”