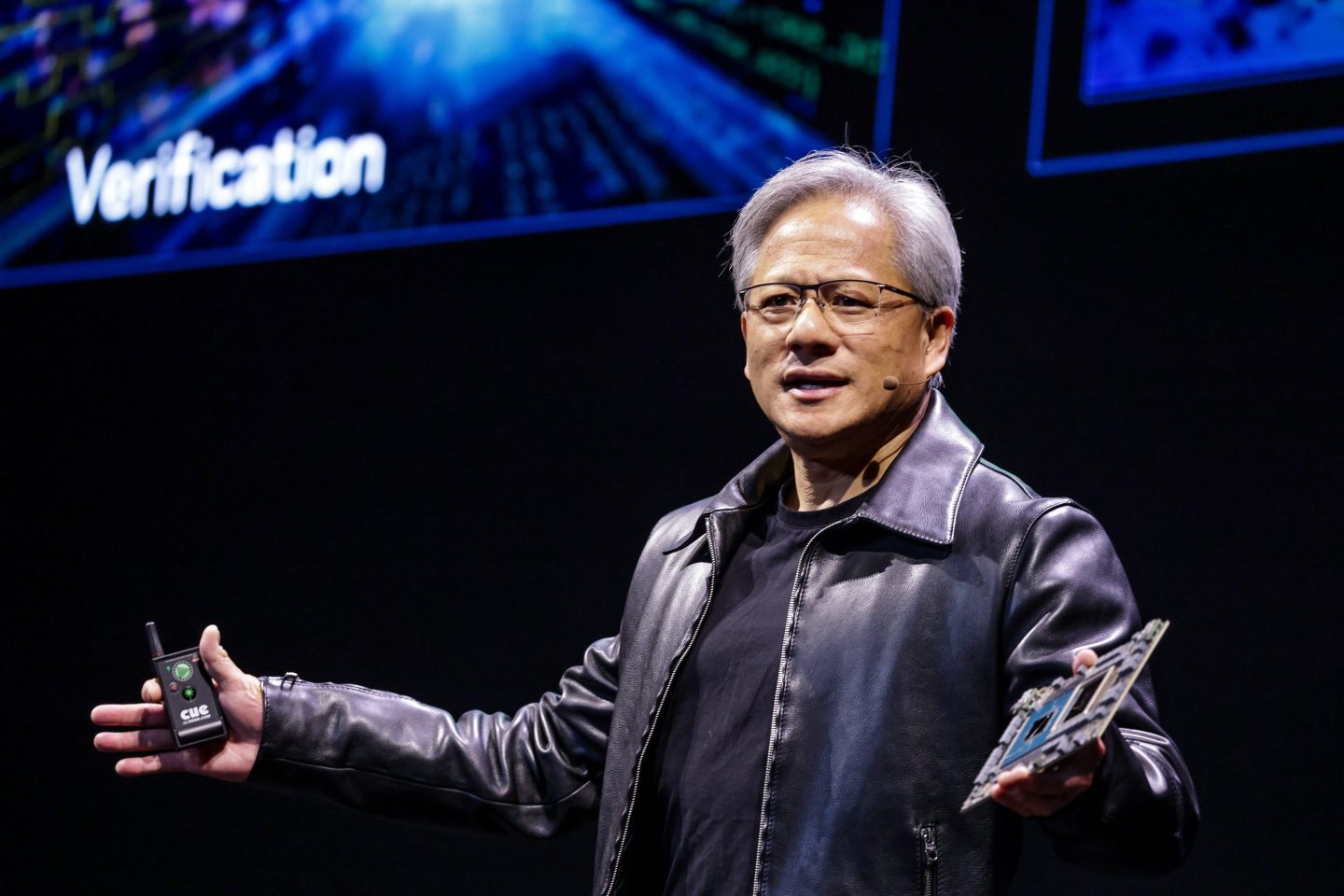

With demand for his company’s AI chips soaring and supply limited, Nvidia CEO Jensen Huang was forced to deliver an unusual message Wednesday.

“We allocate fairly. We do the best we can to allocate fairly, and to avoid allocating unnecessarily,” Huang said in response to a question during Nvidia’s fourth-quarter earnings call.

The Nvidia boss was referring to how the company decides who gets first dibs on its graphics processors, or GPUs, the hardware that powers the artificial intelligence boom. Tech giants like Meta, Microsoft, Amazon, and Alphabet can’t get enough of Nvidia’s GPUs as they race to build data centers that make popular generative AI services like ChatGPT, Runway AI, and Gemini a reality.

And that’s just one group of customers. A long list of original equipment manufacturers (OEMs) and original design manufacturers (ODMs) are also clamoring for the GPUs, not to mention customers in industries like biology, health care, finance, AI development, and robotics.

Having to choose between desperate customers might seem like a good problem to have. Indeed, Nvidia’s $22 billion in fourth-quarter revenue was more than triple what it was a year ago and came in $2 billion above the company’s own forecast.

“Accelerated computing and generative AI have hit the tipping point. Demand is surging worldwide across companies, industries, and nations,” Huang said in a statement accompanying the results, which sent Nvidia’s stock up more than 8% in after-hours trading.

But managing that demand is no simple feat, and in the chip business, getting it wrong can have disastrous consequences for the balance sheet. So Huang needs customers to trust that Nvidia isn’t playing favorites, lest they order more than they actually need or consider alternatives such as AMD’s forthcoming AI chips.

In trying to assure listeners of Nvidia’s evenhandedness, Huang explained how the company works strategically with cloud service providers (CSPs), which account for 40% of Nvidia’s data center business, to ensure that they can responsibly plan for their needs.

“Our CSPs have a very clear view of our product roadmap and transitions,” Huang said. “And that transparency with our CSPs gives them the confidence of which products to place, and where, and when… they know the timing to the best of our ability, and they know quantities and of course allocation.”

All eyes are on Nvidia

The attention that Nvidia’s earnings report commanded on Wednesday would be hard to overstate. Across social media platforms, vivid AI-generated images depicted cityscapes in disarray, with towering structures engulfed in flames, each captioned with some variation of the same message: a failure by Nvidia to meet earnings expectations could have profound implications for the world at large.

“If Nvidia doesn’t beat earnings expectations today the earth will explode,” one financial advisor joked in a tweet.

In the era of burgeoning artificial intelligence and heightened corporate demand for its capabilities, Nvidia has emerged as a pivotal player. Its significance is underscored by the fact that it commands over 80% of the worldwide market for the specialized chips essential for powering AI applications, according to Reuters. This week, Nvidia’s market cap surged to $1.8 trillion, propelling it to the position of the fourth-largest company globally by market capitalization, surpassing both Amazon and Alphabet in the process.

When it comes to GPU supply, Huang said, the issue is more complex than it might seem. While he commended the supply chain for providing Nvidia with necessary components like packaging, memory, and various other parts, he underscored the substantial size of the GPUs themselves.

“People think that Nvidia GPUs is like a chip. But the Nvidia Hopper GPU is 35,000 parts. It weighs 70 pounds,” Huang said. “These things are really complicated… people call it an AI supercomputer for good reason. If you ever look in the back of the data center, the systems, the cabling system is mind-boggling. It is the most dense, complex cabling system for networking the world’s ever seen.”